Why I Stopped Writing Commit Messages

And you should too!

Recently I have been working on revect in my free time. It’s a project that uses AI embeddings and an MCP server so you can recall and persist any information.

The idea is to connect it to all upcoming AI systems: Windows, Cline, Claude Code, Claude Desktop, and any other tools that support MCP servers. You can store anything you want and recall it later using semantic search.

I’ll be writing more about AI and the learnings I’ve had as part of making this project in the coming weeks and months.

Let AI Write Our Commit Messages

In this post, I’ll share a quick tip for anyone who is tired of rushing through a debugging session and writing “fix” or some other useless commit message out of frustration.

Enter Aider. It’s been out for quite a while, and it can read your working git tree, send that info to a model, and have it spit out a good commit message. Sounds awesome, and it is. But there are a few quick tips and quirks to know in order to set it up.

Aider Setup ⌨️

Aider is an AI pair programming tool that can be installed via pip, Python's package manager. To install it just run:

pip install aider-chat

in your terminal or command prompt. You can check their get started guide for more info.

Now, we need to add these env variables to our zsh or bash profile:

export LM_STUDIO_API_KEY=dummy-api-key

export LM_STUDIO_API_BASE=http://localhost:1234/v1

export AIDER_MODEL=lm_studio/devstral-small-2505-mlx

push() { aider --commit; git push; }

commit() { aider --commit; }

For some reason, Aider says it requires an API key, although LM Studio doesn’t need one, so a dummy value is enough. LM Studio is the server that actually hosts the model we will use for chats and commits, and we will install it next.

Below the config, I added two functions to make this even faster in my daily workflow. Both are self-explanatory: commit has Aider commit your changes with a generated message, and push does the same and then pushes.
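If you would rather not push when the commit step fails, here is a minimal sketch of a slightly stricter variant of the two functions. It assumes aider exits with a non-zero status when it cannot commit, which is worth checking on your version.

```shell
# Same helper as above: let aider write the message and commit.
commit() {
  aider --commit
}

# Variant of push: only run `git push` if the commit step succeeded.
# Assumption: aider returns a non-zero exit code on failure.
push() {
  commit && git push
}
```

The only change is `&&` in place of `;`, so a failed commit stops the function before anything is pushed.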

LM Studio 🖲️

You may be tempted to use Ollama for this, but as of this writing it has a couple major flaws and bugs that I cannot believe still exist in the project.

In my first attempt at this, I was using Ollama, and I noticed it would not let me set the context window to 128k for the model we will be using. If I did set it, it would use 5x the RAM it should be using, and then reset itself anytime Aider ran…

Next, it would not keep the model in memory, even if I told it to. As soon as a request came in, the keep-alive timer would reset to 4 minutes, after which the model was unloaded from memory.

If you’re not familiar with what this means: once a model is unloaded, the next time you use it you need to wait for it to be loaded into RAM again. I have 128 GB of RAM, so I like to keep it loaded for the moment I call it, as a request can still take some time to finish depending on the context passed in.

Let’s install LM Studio from their website. Once that’s done, move on to the next section.

Devstral 🎉

Now that we have Aider and LM Studio set up, we just need to install Devstral. Search for it in LM Studio, and use the MLX version if you are on macOS. This uses Apple’s low-level inference engine, which is slightly faster than regular GGUFs.

This model came out last week from Mistral, and it’s currently the best agentic coder for local usage that I’ve used.

This model is awesome. It’s one of the first local models that can be utilized for tools like Cline.

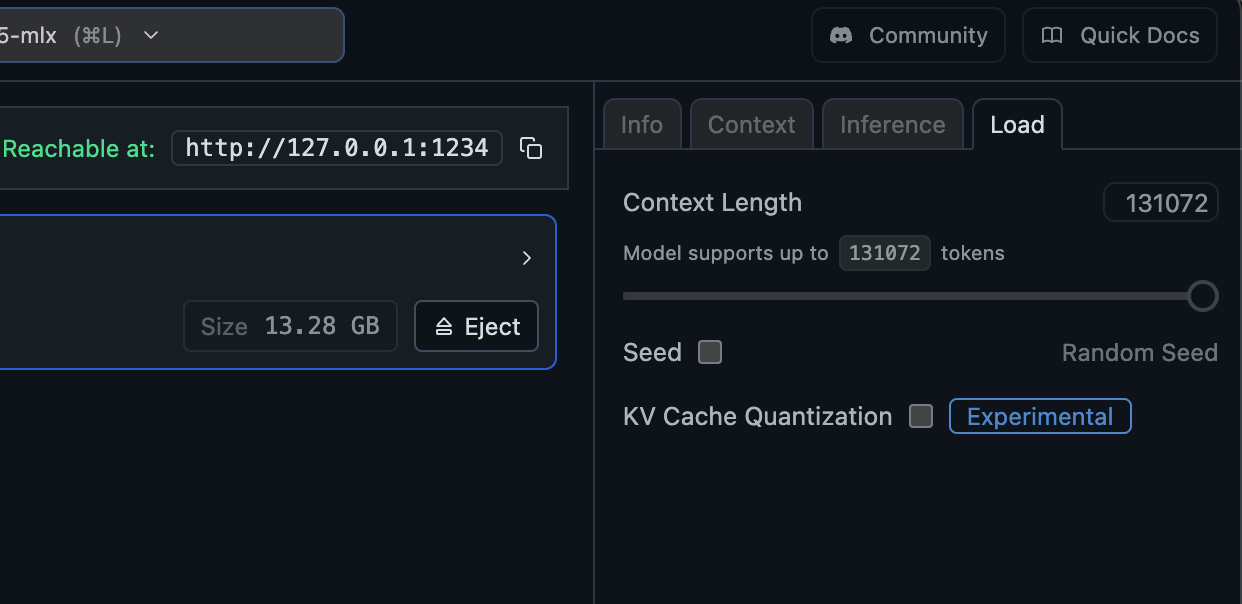

Now that we have it downloaded, go to the server tab and start the server. I like to use these settings:

If you have at least 15 GB of RAM free, you can use my same settings. If you do not have that much RAM free at all times, I recommend enabling “Auto unload unused JIT loaded models” so they get released after you use them.

Also, don’t forget to increase the context size in the “load” tab on the right:

Otherwise, large commits will not have enough space to fit, and the message might make no sense.

Consider doing at least 20-30k context if you’re low on memory or compute.
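To get a feel for whether a change will fit, here is a rough sketch that estimates the token count of a diff. The `estimate_tokens` name is made up for this example, and the 4-bytes-per-token ratio is only a common rule of thumb, not Devstral’s actual tokenizer, so treat the result as a ballpark.

```shell
# Rough token estimate for text on stdin, assuming ~4 bytes per token.
# This ratio is a rule of thumb, not the model's real tokenizer.
estimate_tokens() {
  local bytes
  bytes=$(wc -c)          # count the bytes piped in
  echo $(( bytes / 4 ))   # integer division gives an approximate token count
}

# Example (run inside a git repo): size of all uncommitted changes.
# git diff HEAD | estimate_tokens
```

If the number is close to the context size you set, the diff plus the prompt probably won’t fit, and it may be worth committing in smaller pieces.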

If you have less RAM available, one of the Qwen 3 models may work for you.
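Before trying a commit, it can help to confirm the server is actually up. The sketch below assumes LM Studio’s OpenAI-compatible server answers on a `/models` route under the base URL we exported earlier. Both function names are hypothetical helpers for this post.

```shell
# Hypothetical helper: build the models endpoint from LM_STUDIO_API_BASE,
# falling back to the default used in this post if the variable is unset.
lmstudio_models_url() {
  echo "${LM_STUDIO_API_BASE:-http://localhost:1234/v1}/models"
}

# Hypothetical helper: report whether the server answers on that endpoint.
# Assumption: the server exposes an OpenAI-style /models route.
lmstudio_up() {
  if curl -sf --max-time 5 "$(lmstudio_models_url)" >/dev/null; then
    echo "LM Studio server is reachable"
  else
    echo "LM Studio server is not responding" >&2
    return 1
  fi
}
```

Run `lmstudio_up` after starting the server. If it fails, check that the port in the server tab matches the one in your profile.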

Let’s see it in action

Success!

In my experience, this works quite well for smaller changes, but messages for larger ones will miss nuance.

If you want an even better commit message, set up Claude Code. It can make great summaries of even larger changes, much faster than this can run on your machine.

That being said, I find that squash-and-merge PRs make the PR title much more important than the individual commit messages, so I have been happy with using Aider and Devstral for this on my M4 Max laptop.

See you here in a week or two for more AI goodness.